Vercept builds products that expand human potential while fostering a culture of transparency, growth, and genuine care for the people we serve.

Core Values

These are the guiding principles that define our culture, decisions, and behavior.

We lead by example

We believe leadership means serving our team through transparency, shared ownership of outcomes, and creating an environment where people can grow, connect, and thrive—not just produce output.

Collective accountability

We take initiative, own our commitments, and hold ourselves and each other responsible in ways that build trust. We align our work with our bigger mission and communicate with honesty, even when it's difficult.

User-centered impact

We build for accessibility, community, and real value—not perceived value. Our responsibility extends to underrepresented groups, data security, platform stability, and transparent communication that empowers rather than exploits.

Growth through connection

We solve problems together, share knowledge openly, and celebrate each other's wins. We maintain our culture through daily rituals that keep us connected as people, not just coworkers.

Future-focused purpose

We aspire to lead, invest back into education and accessibility, and give back through philanthropy and social good. Every decision we make today shapes the future we want to see.

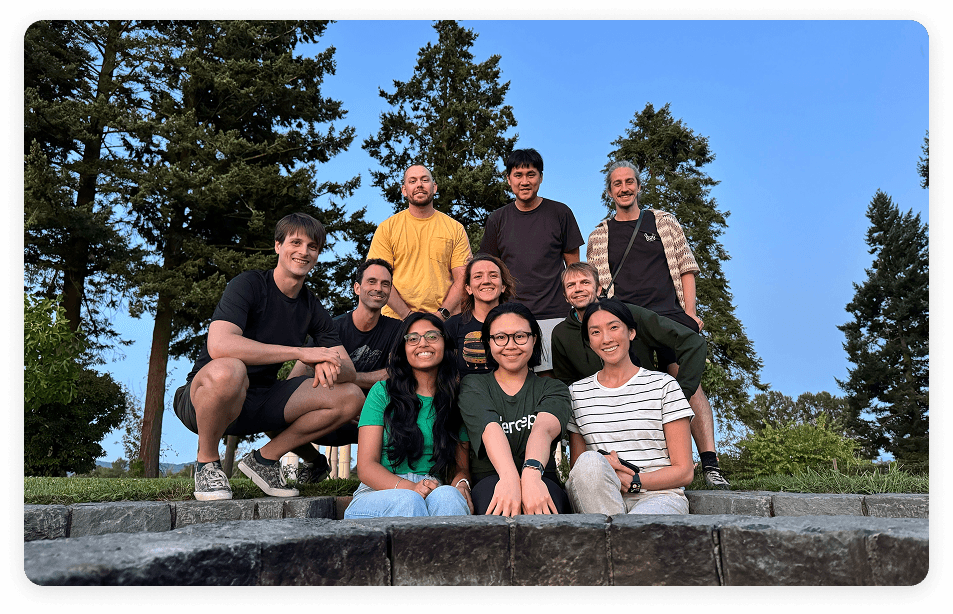

Meet Our Team

Our founding team is world-class in building AI.

Kiana Ehsani

CEO

Previously Senior Research Scientist at AI2, leading research in computer vision, deep learning, and AI agents. Recent best papers at CoRL 2024 (PoliFormer), IROS 2024 (HarmonicMM), and ICRA 2024 (RT-X). Ph.D. from UW CSE.

Luca Weihs

Co-founder

Previously Research Manager and Infrastructure Team Lead at AI2. Led work in AI agents and reinforcement learning. Recent work includes PoliFormer, SPOC, and AllenAct. UW Stats Ph.D. and UC Berkeley Math valedictorian.

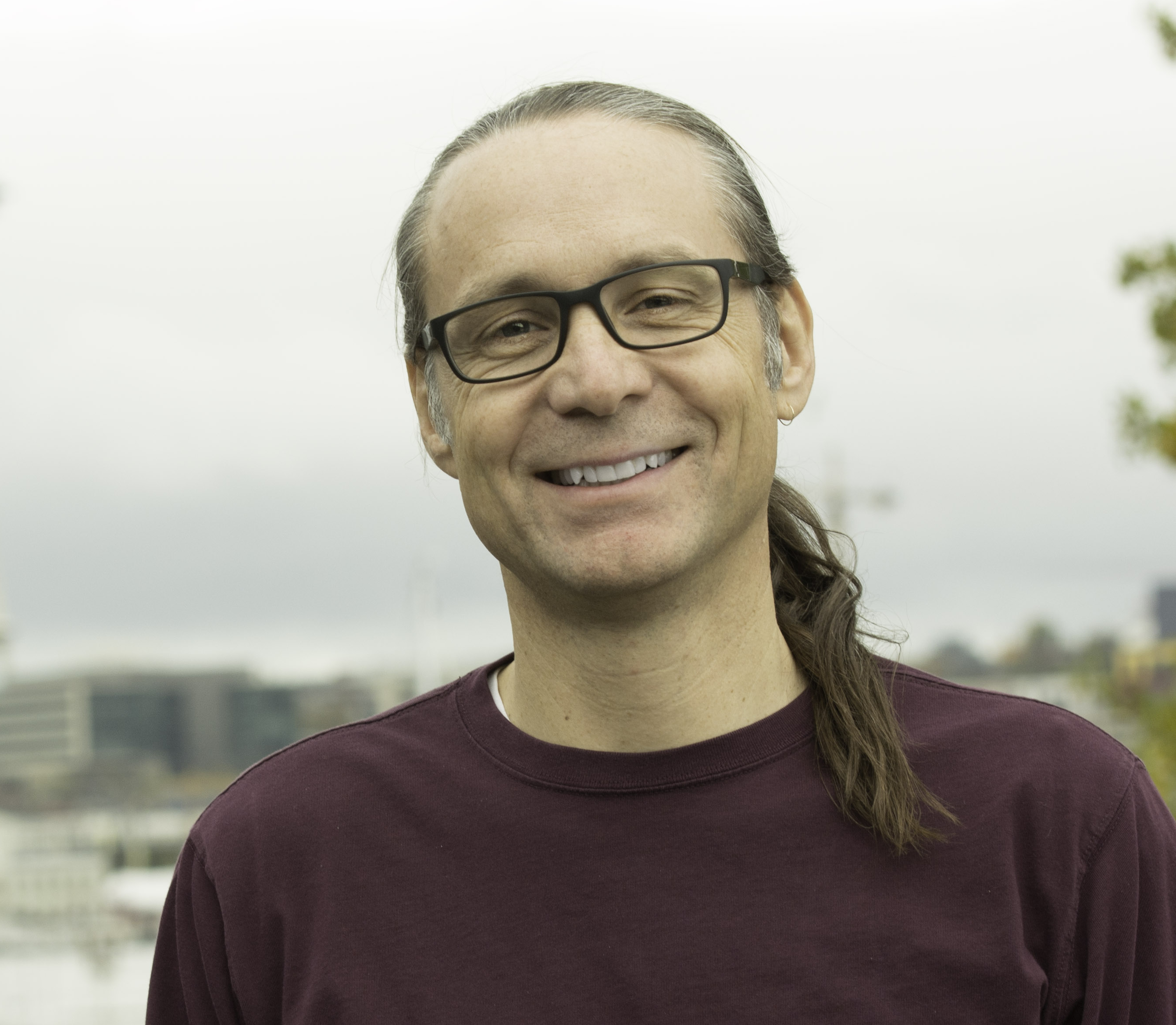

Ross Girshick

Co-founder

The 18th most cited person in the history of science. Pioneered computer vision with deep learning (Faster R-CNN, Mask R-CNN, Segment Anything). Previously Research Scientist at Meta AI and AI2, and Postdoc at UC Berkeley.

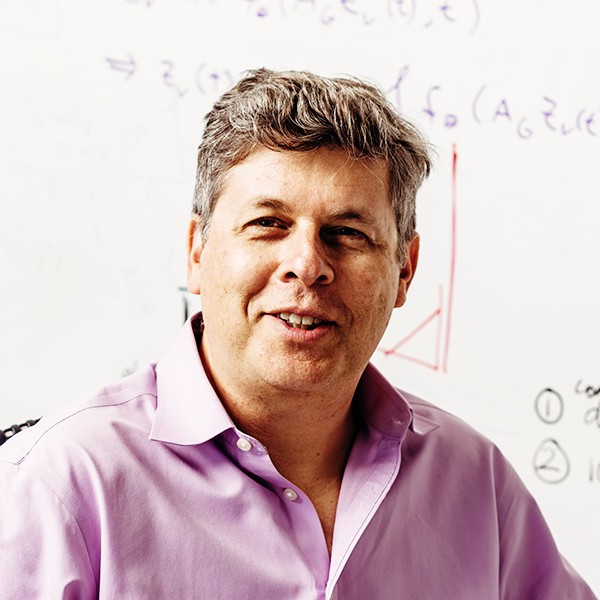

Oren Etzioni

Co-founder

Founding CEO of AI2 (2013-2022). Pioneer in natural language processing and machine learning. Professor Emeritus at UW with an h-index of 100+. Founded multiple successful companies, including Farecast (acquired by Microsoft).

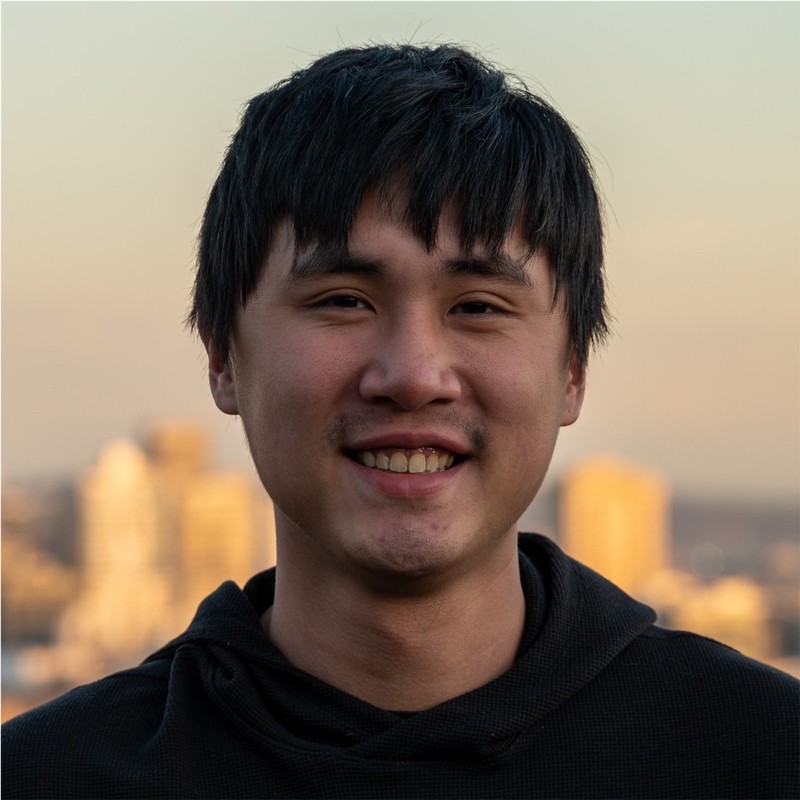

Kuo-Hao Zeng

Member of Technical Staff

Previously Research Scientist at AI2. Trains policies with IL/RL in simulation and deploys them to the real world. Led CoRL 2024 Best Paper (PoliFormer). Ph.D. from UW CSE.

Eric Kolve

Member of Technical Staff

Previously Principal Software Engineer at the Allen Institute for Artificial Intelligence (AI2), contributing to research in embodied AI and collaborative agent systems.

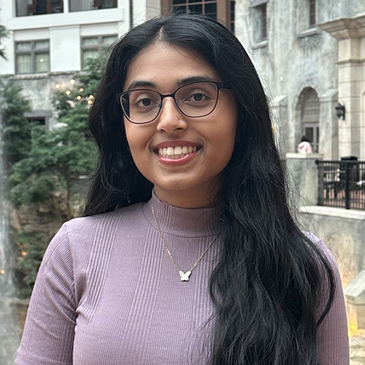

Harshitha Rebala

Member of Technical Staff

Previously an ML research assistant at KurtLab at UW and a SWE intern at Microsoft. Computer Science graduate from the University of Washington.

Huong Ngo

Member of Technical Staff

Previously Predoctoral Researcher at AI2. Led OLMoASR and worked on Molmo and Objaverse. Bachelor's from UW Seattle.

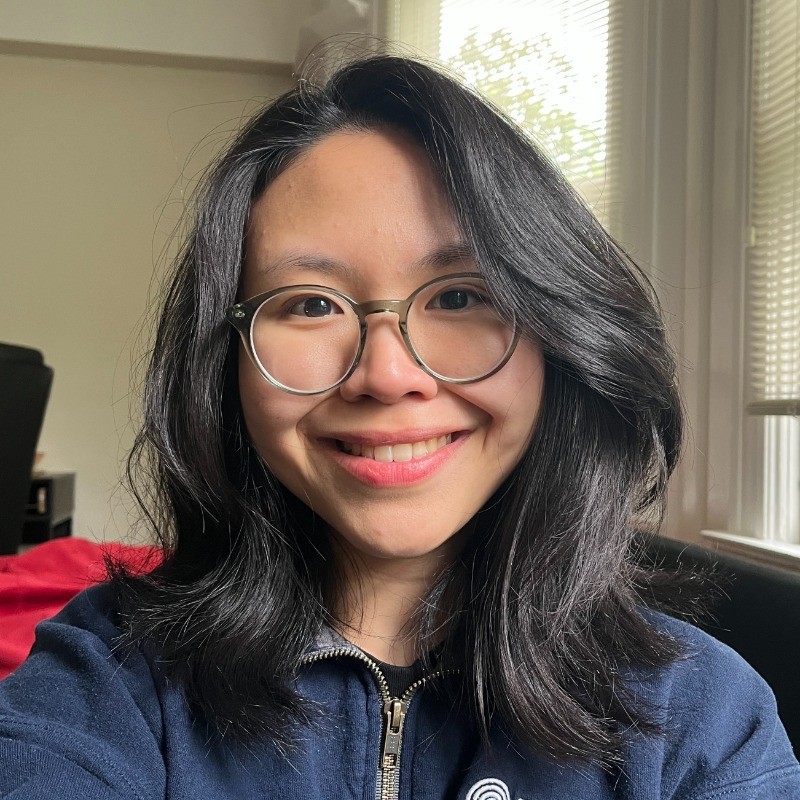

Flora Ku

Head of Operations

Previously built and ran core operational functions at Madrona Venture Labs for both the internal team and portfolio companies. MBA from UW Foster School of Business.

Harsh Agrawal

Member of Technical Staff

Previously Research Scientist at Apple MLR building interactive and embodied multimodal agents. Helped build and maintain EvalAI, an open-source evaluation platform that has hosted 450+ AI challenges. Ph.D. from Georgia Tech.

Charlie Morss

Member of Technical Staff

Previously Head of Engineering at Copy.ai, scaling a generative AI platform to 17M+ users. Former CTO of NextStep, building technology to address healthcare workforce shortages, and engineering leader at Stripe, where he led work on Sigma and enterprise fraud detection. Earlier CTO roles include Urbanspoon (acquired by Zomato) and Audiosocket.

Jimmy Bales

Operations Manager

Previously Operations Manager at Madrona Venture Labs, where he built and managed community, events, and operational programs supporting a 500+ founder community and portfolio companies.

Cam Sloan

Member of Technical Staff

Cam Sloan is a software engineer and entrepreneur. He previously co-founded Hopscotch, a user onboarding platform for SaaS companies.

You

Dream role

Self-driven and passionate about building cutting-edge AI products. You'll help bring our advanced AI systems to millions of users worldwide.

Help us change the world—

one breakthrough at a time

Think you'd be a great fit? Check out our job board for current openings.